You may have heard the term explainable AI before, but what does it actually mean? And why do we need it?

According to Google, explainable AI is “a set of tools and frameworks to help you understand and interpret predictions made by your machine learning models.”

In essence, it is the ability to explain why your AI system made the decisions it did. That is a real challenge, given the way machine learning algorithms work.

A few months ago, I had the pleasure of seeing Laurence Moroney, AI Advocate at Google, speak at London Tech Week. Laurence gave a brilliant account of the difference between traditional programming and machine learning.

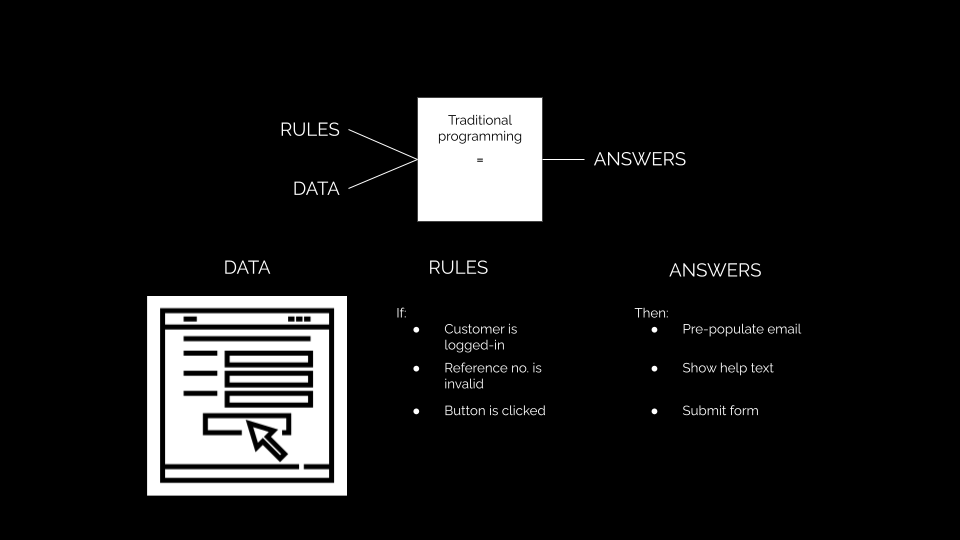

Laurence explains that in traditional programming, the kind of software development every programmer and software engineer is familiar with, the developer supplies rules and data, and the two together produce answers.

For example, let’s say you wanted to build a form to allow somebody to subscribe to your newsletter. You would provide data (the customer’s typed response), then create rules (when the Submit button is pressed, send the data from X form field into Y database), and finally, you’d have your answer (subscription successful).
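To make this concrete, here is a minimal Python sketch of the newsletter example. It assumes a hypothetical `on_submit` handler, a simple "contains @" validity check and an in-memory list standing in for database Y; none of these come from a real framework. The point is that the programmer writes every rule by hand: data goes in, the hand-written rules run, and an answer comes out.

```python
# A minimal sketch of "rules + data -> answers" (traditional programming).
# The handler name, the "@" check and the in-memory list used as the
# database are hypothetical illustrations, not a real web framework.

subscribers: list[str] = []  # stands in for database Y


def on_submit(email_field: str) -> str:
    """Hand-written rule: when Submit is pressed, store form field X in database Y."""
    email = email_field.strip()        # data: the customer's typed response
    if "@" not in email:               # hand-written validation rule
        return "subscription failed: invalid email"
    subscribers.append(email)          # rule: send the data into the database
    return "subscription successful"   # answer


print(on_submit("reader@example.com"))  # → subscription successful
print(on_submit("not-an-email"))        # → subscription failed: invalid email
```

Every behaviour here is fully explainable, because a person wrote each rule and can point to the line responsible for any answer.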

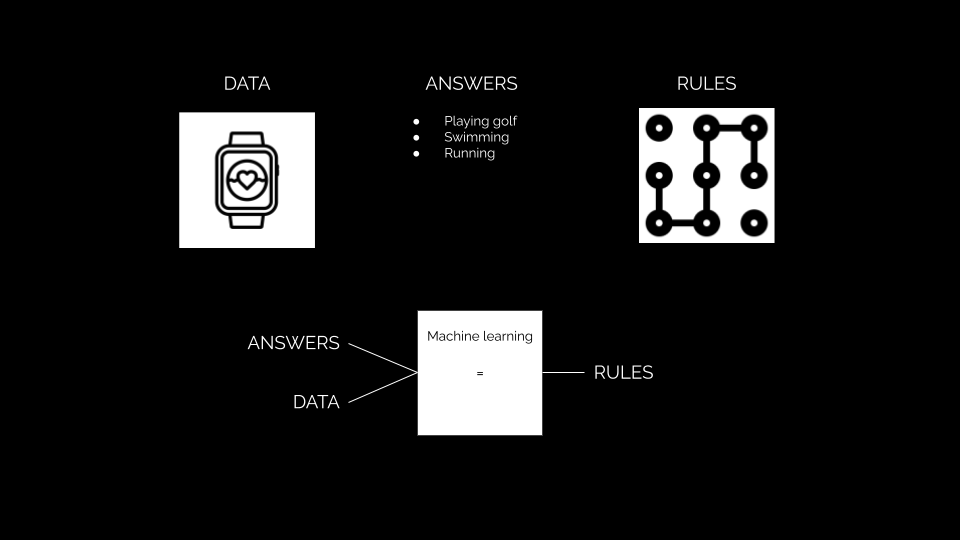

With machine learning, you instead provide the data and the answers, and the algorithm produces the rules.

Laurence gives the example of a smartwatch. For a smartwatch to detect whether somebody is walking, running, playing golf or playing tennis, you couldn’t realistically write enough hand-coded rules to recognise each activity. The problem is too complex.

Instead, you would have somebody wear a sensor and perform those activities. This generates the data (the movements and heart rate associated with each activity). You then give the system the answers (a label for the activity being performed), and the algorithm works out the rules (when somebody is moving like this, their heart rate is at that level and these other signals look like so, they are probably running).
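The "data + answers → rules" idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any real smartwatch works: the sensor readings are made up, only two activities are used, and the learning method is a one-split decision stump, chosen because the rule it learns is short enough to read.

```python
# A minimal sketch of "data + answers -> rules" using a one-split decision stump.
# The readings below are invented for illustration, not real sensor measurements.

Sample = tuple[dict[str, float], str]

# Data (sensor readings) paired with answers (activity labels).
training: list[Sample] = [
    ({"cadence": 100.0, "heart_rate": 95.0}, "walking"),
    ({"cadence": 110.0, "heart_rate": 105.0}, "walking"),
    ({"cadence": 115.0, "heart_rate": 110.0}, "walking"),
    ({"cadence": 160.0, "heart_rate": 150.0}, "running"),
    ({"cadence": 170.0, "heart_rate": 160.0}, "running"),
    ({"cadence": 175.0, "heart_rate": 165.0}, "running"),
]


def learn_stump(samples: list[Sample]) -> tuple[str, float]:
    """Try every feature and candidate threshold; keep the split with the fewest errors.

    The learned rule is: predict "running" if feature > threshold, else "walking".
    """
    best = None  # (errors, feature, threshold)
    for feature in samples[0][0]:
        values = sorted(x[feature] for x, _ in samples)
        # Candidate thresholds: midpoints between consecutive observed values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            errors = sum(
                (x[feature] > threshold) != (label == "running")
                for x, label in samples
            )
            if best is None or errors < best[0]:
                best = (errors, feature, threshold)
    assert best is not None
    return best[1], best[2]


def classify(reading: dict[str, float], feature: str, threshold: float) -> str:
    """Apply the learned rule to a new reading."""
    return "running" if reading[feature] > threshold else "walking"


feature, threshold = learn_stump(training)
print(f"learned rule: if {feature} > {threshold} then running, else walking")
# → learned rule: if cadence > 137.5 then running, else walking
```

Nobody wrote the threshold of 137.5; the algorithm derived it from the labelled examples. A single split like this is still easy to read, but real activity-recognition models combine many signals and far more learned parameters, which is exactly where the rules stop being human-readable.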

If machine learning works this way, why do we need explainable AI?

I recently spoke with Mara Cairo, Product Owner at Amii, who built on this analogy to explain exactly why explainable AI is important.

When a machine learning algorithm generates the rules in the scenario above, those rules can be so complex that no human could realistically understand them. The same point came up in Elon Musk’s recent conversation with British Prime Minister Rishi Sunak, in which Musk claimed that it is impossible for a human to fully understand the complexity of AI decision-making.

Therein lies the issue. We build algorithms and AI systems that identify patterns and make decisions based on the large amounts of data and labelled answers we provide. Yet the rules the system identifies, the reasoning behind those rules and the decisions made using them are precisely the parts we do not understand.

Explainable AI seeks to fill that gap as best it can, but the jury is still out on how realistic that goal really is.

You can catch my full interview with Mara Cairo, in which we discuss explainable AI in more detail along with how to identify an appropriate business problem to solve with AI, AI literacy and AI maturity, on LinkedIn, YouTube, Apple Podcasts, Spotify or wherever else you get your podcasts.