This year, following Gartner’s Magic Quadrant for Enterprise Conversational AI Platforms, Gartner also conducted a Critical Capabilities analysis. This highlighted, according to Gartner, the top capabilities that conversational AI platforms need to have in order to be effective at enabling enterprise automation. It also scores each vendor on their ability to cater to these capabilities.

Gartner’s analysis provides a great high-level overview of these capabilities as a starting point for enterprise buyers deciding which conversational AI platform to go with. For buyers to actually make a decision, however, they need more detail.

That’s what this article is about.

I’ll present Gartner’s ranking and top-rated platforms in each category. I’ll then explain each of the Critical Capabilities in detail: what they mean, why they’re important and how they impact your chances of automation success. Finally, I’ll offer some capabilities and use cases of my own for consideration in next year’s analysis (and for consideration for decision makers looking to purchase a platform this year or next).

The goal of this analysis is to present enterprise buyers, decision makers and influencers with a full understanding of the capabilities they’ll need to make their automation programme a success, so that they can make informed decisions about their automation technology strategy.

What are the Critical Capabilities, specifically?

Gartner splits what it considers ‘Critical Capabilities’ into two categories: Critical Capabilities and Use Cases.

- Critical Capabilities you can think of as features: requirements that conversational AI platforms need to cater for.

- Use Cases are how each platform packages up these critical capabilities to enable effective enterprise automation for a given suite of situations and solutions.

What vendors were included in the analysis?

The Critical Capabilities assessment takes each of the Enterprise Conversational AI Platforms that made it into the 2023 Magic Quadrant. That’s 19 vendors in total, ranging from the big cloud providers, like Google, Amazon, and IBM, to the AI-focused scale-ups like Cognigy, OneReach, Kore, Boost and others.

The full list of vendors included:

- [24]7.ai

- Aisera

- Amazon Web Services

- Amelia

- Avaamo

- Boost.ai

- Cognigy

- eGain

- Google

- IBM

- Inbenta

- Kore.ai

- Laiye

- Omilia

- OneReach.ai

- Openstream.ai

- Sinch

- Sprinklr

- Yellow.ai

Who won overall?

This year, in 2023, according to Gartner, Cognigy emerged as the leading Enterprise Conversational AI Platform for delivering the specified Critical Capabilities. Cognigy displaced last year’s victor, OneReach, from pole position.

I don’t have direct experience with each platform on the list, but I’m familiar enough with most of them to say that each platform considered in the analysis is a capable platform in its own right. To be crowned cream of the crop is therefore a big accomplishment.

To outrank Google, Amazon and IBM is no small task, and to pip your peers to the post is considerable, given the level of competition in this field.

Lastly, having Gartner’s weight and reputation behind this will, I’m sure, be a handy reference for potential buyers. So it’s a big congratulations to Cognigy on this achievement.

Gartner’s Critical Capability Ratings

Below is a list of the Critical Capabilities, as well as the results of the analysis, including which provider came out on top in each category:

- Channels: OneReach.ai (4.9/5)

- Voice: Avaamo and Omilia (4.8/5)

- Natural Language Understanding: Cognigy (5/5)

- Dialogue management: Kore.ai (4.9/5)

- Back-end integrations: Cognigy and Kore.ai (4.9/5)

- Agent escalation: Cognigy and Kore.ai (4.9/5)

- Analytics, Continuous improvements: Amelia (4.8/5)

- Bot orchestration: Amelia and OneReach.ai (5/5)

- Life Cycle Management: Cognigy (4.8/5)

- Enterprise Administration: Amelia, Cognigy and Kore.ai (5/5)

- Deployment Options: IBM (4.9/5)

Gartner’s Use Case Ratings

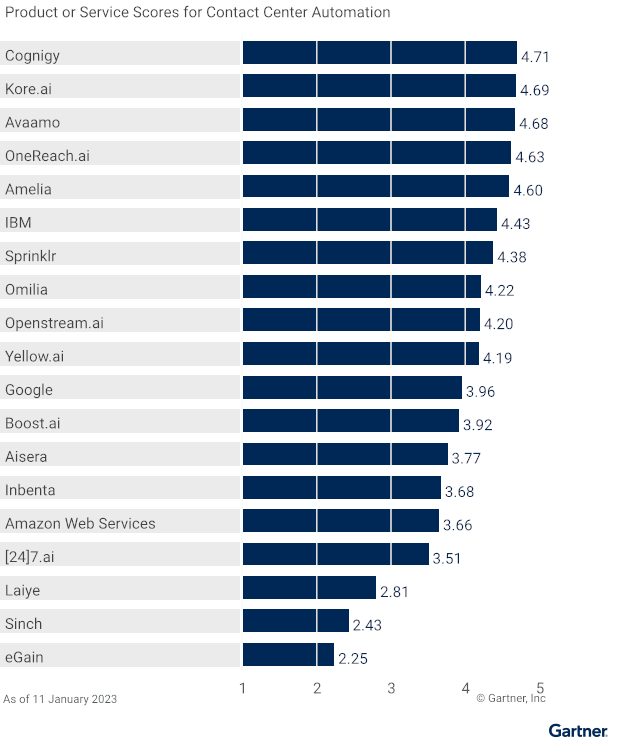

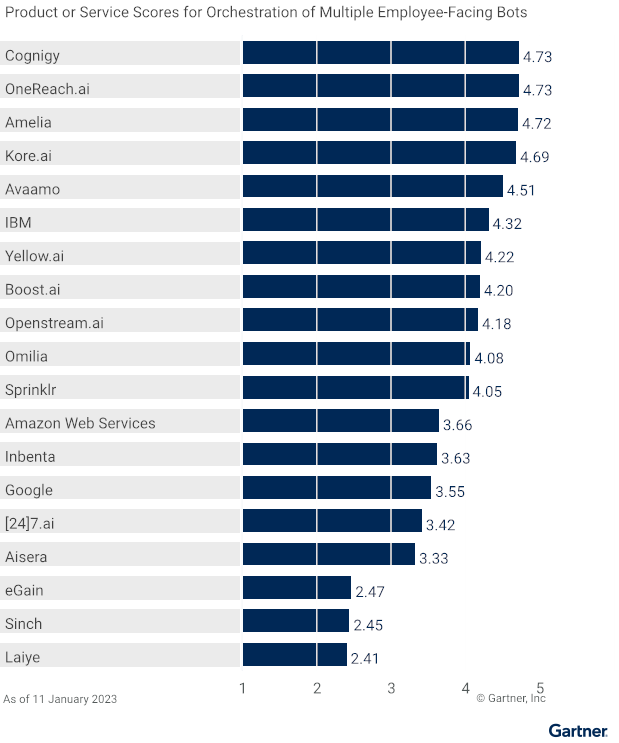

Below are the results of the analysis on Use Cases, and which provider came out on top in the below categories:

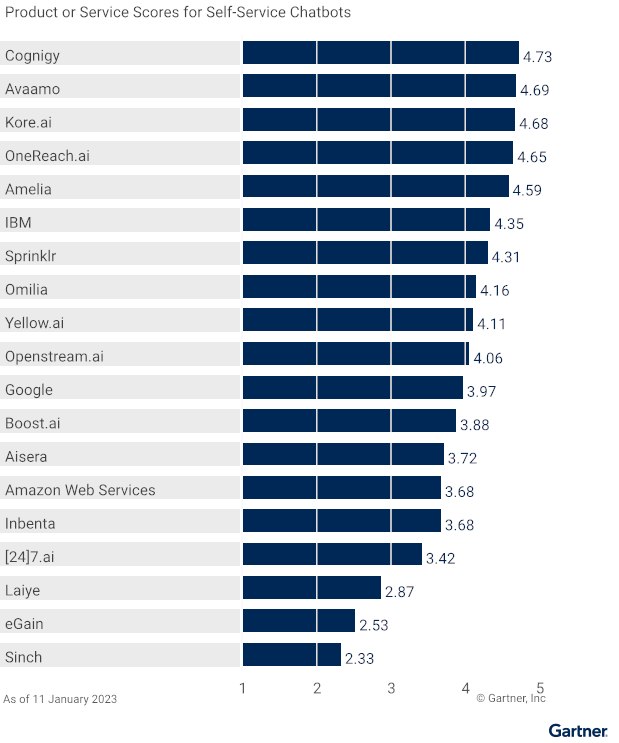

- Self-Service Chatbots: Cognigy (4.73/5)

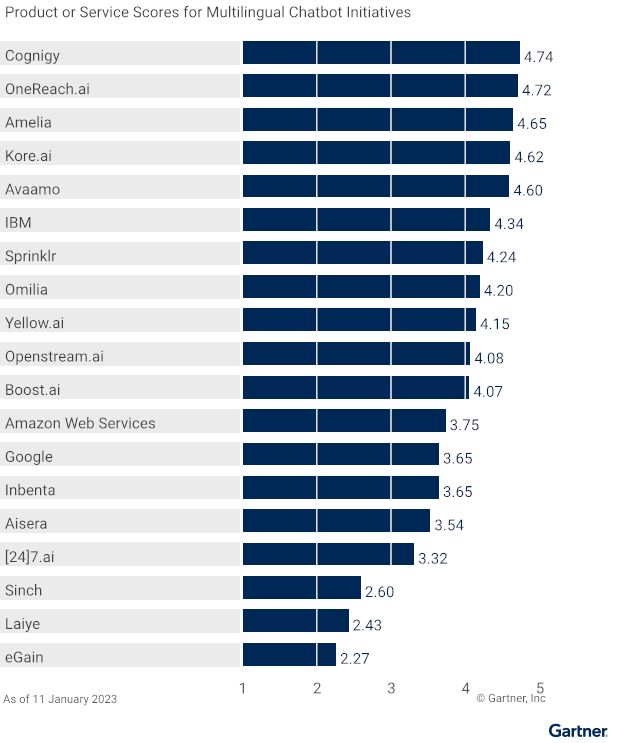

- Multilingual Chatbot Initiatives: Cognigy (4.74/5)

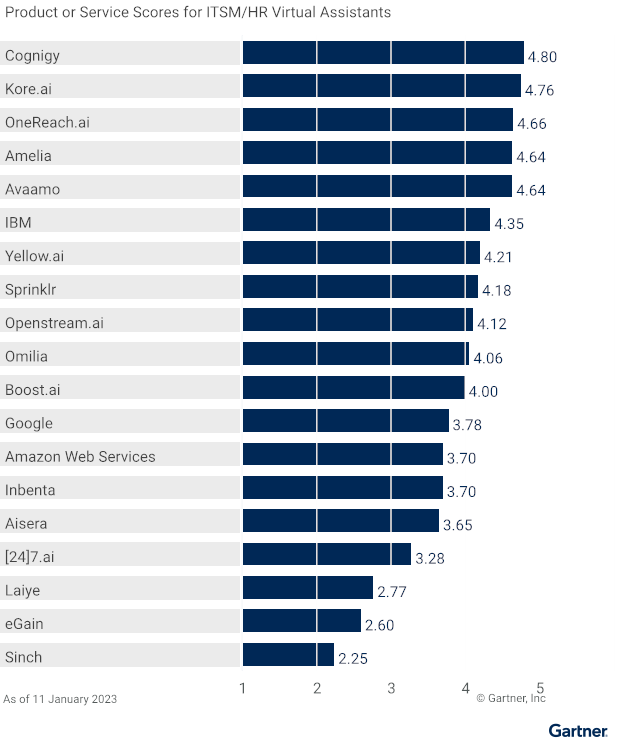

- ITSM/HR Virtual Assistants: Cognigy (4.8/5)

- Contact Center Automation: Cognigy (4.71/5)

- Orchestration of Multiple Employee-Facing Bots: Cognigy (4.73/5)

An appraisal of Gartner’s Critical Capability Categories

Listed below are the features that Gartner determines crucial for enterprise conversational AI platforms.

Under each header, in quotation marks, is an abbreviated (or expanded) description of Gartner’s definition, followed by my interpretation and analysis of the capability.

Channels

“The ability to connect to different channels, such as messaging, chat and voice, as well as use specific rich features of channels, such as receiving images from WhatsApp and presenting buttons and carousels in chat widgets.”

No doubt this is important. For me, this should be two separate capabilities, though. One is technical, the other is design and experience focused.

First, being able to deploy into different channels is, of course, critical. How you provide this capability should be a consideration, too.

I know some providers that have multiple CPaaS vendors they partner with to enable channel integrations. I also know others who have all of this in-house. Platforms should also have the ability for customers to add their own channels through custom integrations if the vendor doesn’t support their channel of choice. Hopefully, this was considered in the analysis.

Second, being able to use the specific rich features of the channel in question is a design consideration that impacts the software capabilities of the platform. From one dialogue management interface, you want the ability to utilise the UI capabilities of the channels you’re deploying into.

For example, you might select the voice you’d like to use in your CCaaS integration, add an image carousel to the same response in chat, and remove the carousel and shorten the text for SMS.

If this isn’t a separate capability, then it should at least be under dialogue management, rather than channels, because it’s more related to the conversation design component of the platform.
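
To make the second point concrete, here’s a minimal sketch in Python of one authored response being adapted per channel. Everything in it is an assumption for illustration: the `Response` class, the `render` function, the channel names and the voice name are hypothetical and don’t reflect any vendor’s API.

```python
from dataclasses import dataclass, field


@dataclass
class Response:
    """One channel-neutral response, authored once in the dialogue designer."""
    text: str
    short_text: str  # condensed copy for constrained channels such as SMS
    carousel: list = field(default_factory=list)  # image references for rich chat
    voice: str = "default"  # hypothetical synthetic voice name


def render(response: Response, channel: str) -> dict:
    """Adapt the same authored response to what each channel can present."""
    if channel == "voice":
        # Voice channels speak the text using the selected synthetic voice.
        return {"speak": response.text, "voice": response.voice}
    if channel == "chat":
        # Rich chat widgets can show the full text plus an image carousel.
        return {"text": response.text, "carousel": response.carousel}
    if channel == "sms":
        # SMS has no rich UI, so drop the carousel and use the shorter copy.
        return {"text": response.short_text}
    raise ValueError(f"Unsupported channel: {channel}")


reply = Response(
    text="Here are three rooms available this weekend. Which would you like?",
    short_text="3 rooms available this weekend. Reply 1, 2 or 3.",
    carousel=["room1.png", "room2.png", "room3.png"],
    voice="brand-voice-1",
)

for channel in ("voice", "chat", "sms"):
    print(channel, render(reply, channel))
```

The point of the sketch is that the channel-specific decisions live alongside the conversation design, not in the channel connector, which is why I’d place this under dialogue management.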

Voice

“The ability to understand voice input and produce voice outputs on a range of voice channels.”

Voice support is of course a crucial part of providing contact centre automation solutions, as well as deploying on specific devices such as smart speakers, in-car infotainment systems and more. Many of the vendors included in this report use third-party speech-to-text (converting spoken words to text, also known as Automatic Speech Recognition or ASR) or text-to-speech (synthetic voices). This is because both of those areas require substantial investment and expertise to get right.

However, in having your own models, as a small number of vendors do, you have ultimate control. You can build a custom speech model that goes beyond acoustic modelling for your specific use case. You can also create custom voices that speak to users in your unique brand voice.

I’d like to see Gartner go beyond the requirement to simply have this capability, and deeper into the performance and pliability of these capabilities, and how well vendors support that.

Natural Language Understanding

“The ability to understand a user’s natural language input, extract entities, as well as training and customising an NLU model.”

This is definitely a critical capability and is the bedrock of understanding what users are saying. We’re on the verge of this element being commoditised, as many vendors have their own proprietary NLU, as well as having support for multiple NLU providers. Gartner doesn’t specify whether this capability is related to the former (proprietary or high performing NLU) or the latter (openness in supporting multiple vendors). Both are important and so I’d assume this broad definition includes both.

I’d also like to see more analysis of the shortlisted platforms’ ability to provide NLU management capabilities, both for sourcing and analysing potential training data and for optimising models on an ongoing basis.

Dialogue management

“The ability to design and manage complex and sophisticated dialogue requirements, such as conversation and context management, memory, reasoning, disambiguation and more.”

Of course, this is a critical capability. You can’t construct a conversation without it.

Something to note here is that many platforms tackle the problem of dialogue management differently. Even the labels and names that they give things differ. Some call it ‘dialogue nodes’, others call them ‘turns’. Some call parts of conversations ‘flows’, others call them ‘sequences’. With some, you have a ‘design canvas’, with others, you have ‘pages’.

I’d like to see some deeper critique in this area in particular from Gartner and to understand the specifics of how it scored vendors, given the various ways vendors are approaching this problem and the lack of standardisation today.

Back-end integrations

“The ability to securely communicate with back-end or line-of-business systems, such as CRM, ERP and other business platforms, as well as other data sources.”

The value of a conversational AI agent is limited without integrations into line of business systems and so this is certainly a critical capability. This is where you’ll find real business value in automating core business processes.

I’d also like to see some acknowledgement of data management in this section. For example, the ability to provision sequential experiences that tie together: starting a conversation on one channel and continuing it on another, recognising returning users and having them continue from where they left off, and things of that nature.
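
As a rough illustration of what that data management implies, here’s a small Python sketch of a session store keyed by user rather than by channel. The `Session` and `SessionStore` names, the channel labels and the in-memory dictionary are all assumptions of mine; a real platform would persist this somewhere durable and tie it to proper identity resolution.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """Conversation state that belongs to the user, not to a channel."""
    user_id: str
    step: str = "start"
    channels: list = field(default_factory=list)
    slots: dict = field(default_factory=dict)


class SessionStore:
    """Hypothetical in-memory store; stands in for a persistent back end."""

    def __init__(self):
        self._sessions = {}

    def resume(self, user_id: str, channel: str) -> Session:
        # Returning users get their existing session, whatever the channel.
        session = self._sessions.setdefault(user_id, Session(user_id))
        if channel not in session.channels:
            session.channels.append(channel)
        return session


store = SessionStore()

# The user starts changing their address in web chat...
web = store.resume("user-42", "web_chat")
web.slots["new_address"] = "1 High Street"
web.step = "confirm_address"

# ...and picks the conversation up later on WhatsApp.
later = store.resume("user-42", "whatsapp")
print(later.step, later.slots, later.channels)
```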

Agent escalation

“The ability to hand conversations over to human agents based on specific conditions or handing over from a human back to an AI agent.”

This is certainly a core requirement of customer service and support interactions. Ideally, the conditions alluded to in Gartner’s definition should include things like skill- or needs-based routing and queue prioritisation for specific users, such as those identified as vulnerable.

It should also include the ability to transfer the details of the conversation, along with a summary to the human agent, so that the interaction can continue where it left off when escalated. For example, if a user has already been authenticated, you don’t want to have to re-authenticate them, or have them repeat the entire conversation.

This capability also requires integration with contact centre platforms to exchange data, as well as a reliance on the contact centre platform to be able to integrate escalated conversations into the agent queue and desktop.

Some CAI platforms offer their own live chat and escalation capability, others simply handover to contact centre platforms. I’d be curious as to whether Gartner considers the former, latter or both in this analysis.
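
Here’s a hedged Python sketch of the kind of handover I’m describing: a payload that carries the summary and authentication state, plus a queue that routes by skill and prioritises vulnerable users. The `Handover` and `AgentQueue` names and fields are hypothetical, not taken from any contact centre platform.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Handover:
    """What the AI agent passes to the human agent on escalation."""
    user_id: str
    skill: str            # the skill the human agent needs
    summary: str          # short summary so the user doesn't repeat themselves
    authenticated: bool   # carried over so the user isn't re-authenticated
    vulnerable: bool = False
    transcript: list = field(default_factory=list)


class AgentQueue:
    """Hypothetical queue: vulnerable users first, then first come, first served."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker that preserves arrival order

    def escalate(self, handover: Handover) -> None:
        priority = 0 if handover.vulnerable else 1
        heapq.heappush(self._heap, (priority, next(self._order), handover))

    def next_for(self, skill: str):
        """Pop the highest-priority handover matching an agent's skill."""
        skipped, found = [], None
        while self._heap:
            item = heapq.heappop(self._heap)
            if item[2].skill == skill:
                found = item[2]
                break
            skipped.append(item)
        for item in skipped:  # put back anything this agent can't handle
            heapq.heappush(self._heap, item)
        return found


queue = AgentQueue()
queue.escalate(Handover("u1", "billing", "Disputes a charge on the latest invoice.", True))
queue.escalate(Handover("u2", "billing", "Can't pay this month and is distressed.", True, vulnerable=True))
queue.escalate(Handover("u3", "tech", "Router is offline after an update.", False))

first = queue.next_for("billing")
print(first.user_id, first.authenticated, first.summary)
```

Whether the queue lives in the conversational AI platform or in the contact centre platform is exactly the open question above; the payload needs to survive the trip either way.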

Analytics, Continuous improvements

“The ability to collect performance and user behaviour data from conversations in real time, and to gain insight for reporting, oversight and improvement purposes. In particular, is the ability of an implementation to easily improve over time as more data is collected.”

For me, this doesn’t go deep enough into the analytics requirements for conversational agents. Likely because there are no standards for how to measure the performance of a conversational AI and every platform offers a slightly different flavour based on what they find important.

Also, to truly measure the impact of an AI agent or programme, you need to measure specific business-level data related to the use case, as well as customer journey-level data. This is typically custom for each business and use case, and will require conversational AI platforms to develop open analytics infrastructure that will enable developers to pipe-in data from other sources, and customise how that data is displayed.

Most businesses will use Looker, Power BI or Tableau for this today, if they even do this at all (hint, they don’t), and most conversational AI platforms treat analytics as a necessary requirement rather than a core platform component.

As for continuous improvement, the definition is a bit woolly for me. An implementation won’t just improve over time on its own. I know of a few companies that automatically label data that hasn’t been classified by a model, add it to the model as training data, then automatically run regression testing. However, this is not a common practice today and isn’t even strictly recommended.

Also, this will only solve the language model classification problem. It won’t solve any issues related to conversation design and experience. For this, you need detailed turn-based analytics and the ability to run things like multivariate tests on changes made.

Bot orchestration

“The ability to orchestrate multiple bots across a single or multiple use cases. This includes collaboration patterns, simple bot pass through and escalating/routing to other bots.”

Although an emerging capability, this is certainly something that maturing organisations will need both today and increasingly in future. Even some of the major voice assistants like Amazon Alexa operate a multi-bot architecture behind the scenes. As businesses expand the scope of their AI assistant programmes, they’ll require the smooth transition between multiple agents and the tools to be able to manage this.

It’s worth noting that there’s more to it than this. The orchestration layer needs to be the ultimate manager of session context and have an understanding of what to do when.

Most of the successful orchestrated architectures pass every utterance and response through the orchestration layer. This requires specific management, memory and context tooling.

I’m not entirely sure whether this is what Gartner is considering here, or whether it’s the simple capability to pass a user from one dumb bot to another. That route requires lots of deterministic logic within each individual bot, which isn’t scalable.
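
To show the difference, here’s a minimal Python sketch of the first pattern, where every utterance passes through an orchestration layer that owns the session context. The `Orchestrator` class and the two bots are hypothetical, and the keyword routing is only a stand-in for whatever classifier or model a real orchestrator would use.

```python
def billing_bot(utterance: str, context: dict) -> str:
    context["last_topic"] = "billing"
    name = context.get("user_name", "there")
    return f"Hi {name}, your balance is due on the 1st."


def it_bot(utterance: str, context: dict) -> str:
    context["last_topic"] = "it"
    return "Try restarting your laptop."


class Orchestrator:
    """Owns session context and decides which bot handles each turn."""

    def __init__(self, bots: dict, keywords: dict):
        self.bots = bots
        self.keywords = keywords  # keyword -> bot name (stand-in for real routing)
        self.sessions = {}        # session id -> shared context

    def route(self, utterance: str, context: dict) -> str:
        lowered = utterance.lower()
        for keyword, bot_name in self.keywords.items():
            if keyword in lowered:
                return bot_name
        # No routing signal: stay with the bot that handled the previous turn.
        return context.get("active_bot", "fallback")

    def handle(self, session_id: str, utterance: str) -> str:
        context = self.sessions.setdefault(session_id, {})
        bot_name = self.route(utterance, context)
        context["active_bot"] = bot_name
        bot = self.bots.get(bot_name)
        if bot is None:
            return "Sorry, I can't help with that yet."
        # Every bot reads and writes the same context, so nothing is lost
        # when the conversation moves from one bot to another.
        return bot(utterance, context)


orchestrator = Orchestrator(
    bots={"billing": billing_bot, "it": it_bot},
    keywords={"invoice": "billing", "laptop": "it"},
)
orchestrator.sessions["s1"] = {"user_name": "Sam"}

print(orchestrator.handle("s1", "I have a question about my invoice"))
print(orchestrator.handle("s1", "When is it due?"))  # stays with the billing bot
print(orchestrator.handle("s1", "My laptop won't start"))
```

In the simple pass-through alternative, each bot would need its own logic for when and where to hand the user off, which is the part that doesn’t scale.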

Life Cycle Management

“The ability to operate in an existing technology architecture and to update, test, deploy and roll back with ease. It also highlights potential solutions to problems in training data or dialogue processes.”

It’s certainly critical that platforms provide the required tooling to embed the technology within a business, and that broader software requirements are adhered to, such as version control, testing and the like.

I’d bundle this capability with the other two below and call it Enterprise Management or Enterprise Readiness, as they are all related to the day to day deploying, running or operating of enterprise software.

Enterprise Administration

“The ability to administer users, roles, access, enterprise compliance and security aspects in the solution with ease and control.”

Again, certainly an important capability for enterprises that utilise the platform as their core AI toolkit and need to deploy the same system to multiple users across departments. As above, though, this really ought to be part of a single capability encompassing the last 3 in this list.

Deployment Options

“The ability to install/grant access to the platform and deploy applications on-premises, in the cloud or on the edge.”

Again, I’d put this under a single capability of Enterprise Management. I also would debate whether this is a critical capability. The world is a big enough place to still be a leading AI provider, even without the requirement to deploy on-prem or on the edge.

Agreed, that’s a requirement of some large organisations, but as more and more businesses move to the cloud, I envisage this being less of a requirement in future.

A note on scoring and weights

It’s worth noting that Gartner only weights the Analytics capability at 5%. Voice is 15%, along with Channels, NLU and Dialogue Management. Given most platforms rely on third-party voice capabilities, the critical capability right now is to integrate with them, not to have them natively. Therefore, I’d knock 5% off Voice and another 5% off Channels and make Analytics 15%. You can deliver untold business value on one channel alone, but not without sufficient analytics.
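
The arithmetic is simple, but a short Python sketch shows that the change moves weight around without changing the total. Only the five weights mentioned above are included, since the remaining weights aren’t stated here. The example scores are made up purely to show the effect and are not Gartner’s ratings for any vendor.

```python
# Weights from the discussion above, in percent. The other capabilities are
# left out of this sketch because their weights aren't given here.
gartner_weights = {
    "Channels": 15,
    "Voice": 15,
    "NLU": 15,
    "Dialogue management": 15,
    "Analytics": 5,
}


def reweight(weights: dict, moves: list) -> dict:
    """Return a copy of weights with percentage points moved between capabilities."""
    updated = dict(weights)
    for source, target, points in moves:
        updated[source] -= points
        updated[target] += points
    return updated


# My suggestion: move 5 points each from Voice and Channels to Analytics.
proposed_weights = reweight(
    gartner_weights,
    [("Voice", "Analytics", 5), ("Channels", "Analytics", 5)],
)


def weighted_contribution(weights: dict, scores: dict) -> float:
    """Contribution of these capabilities to an overall score out of 5."""
    return sum(weights[name] * scores[name] for name in weights) / 100


# Hypothetical vendor: strong on channels and voice, weaker on analytics.
example_scores = {
    "Channels": 4.0,
    "Voice": 4.0,
    "NLU": 4.5,
    "Dialogue management": 4.5,
    "Analytics": 3.0,
}

print(proposed_weights)
print(weighted_contribution(gartner_weights, example_scores))
print(weighted_contribution(proposed_weights, example_scores))
```

With these made-up scores, the vendor’s weaker analytics costs it more under the proposed weights, which is the behaviour I’d want the scoring to have.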

Use Cases

Below is how Gartner defines the use cases on which it grades providers. This really should reflect how well the above capabilities can be utilised together to deliver value for businesses.

Self-Service Chatbots

“Multichannel and multimodal conversational capabilities are critical for the success of self-service chatbot applications.”

Self-service chatbots are among the fundamental use cases for enterprise conversational AI platforms. What else are they for if not this?

In an era where customer expectations are through the roof, digital self-service channels like the web and mobile have only taken businesses so far. Most companies have more incoming demand than their people can handle, and so the ability to provide 24/7 self-service in a one-to-many fashion, across traditionally one-to-one channels, is crucial.

Multilingual Chatbot Initiatives

“Multilingual bot initiatives require CAIPs to offer NLP support for applicable languages, as well as expertise and functionalities that enhance localization efficiencies.”

In our globalised world, it’s imperative for businesses to be able to communicate effectively with diverse audiences. This enables global businesses to reach their global customer base, and enables local businesses to expand into new geographies. It also allows businesses to cater to the needs of their local markets where multiple languages may be spoken.

And, even though the proportion of chatbots that are multilingual today isn’t compelling enough for this to be a critical capability now, this will become a fundamental need for a growing number of enterprises in the near future, and so I think it’s a warranted use case.

ITSM/HR Virtual Assistants

“ITSM focuses on diagnostic dialogues leading to preferably automated IT issue resolution. HR focuses on automation of pertinent informational and transactional interactions.”

These internal specialist assistants are often a low risk place to begin an automation pilot. IT assistants, in particular, can facilitate faster IT issue resolution, and HR bots can streamline many routine tasks, from answering policy-related questions to booking leave. This enables the internal service organisations to benefit from streamlining processes and makes staff lives easier.

The only critique I have of this use case is that the capabilities required to deliver self-service chatbots are pretty much the same as those required to deliver this use case. Integrations and access may be restricted behind a firewall, but the fundamental mechanics are the same. And the goal of the use case is also the same: self-service.

I’d have liked Gartner to specify why this isn’t part of the self-service chatbot use case and the meaningful difference between the two.

Contact Center Automation

“These capabilities span support for multimodal conversations, out-of-the-box integration with contact-center platforms and conversation-analytics skills through to advanced agent escalation and agent assist functionalities.”

Along with self-service chatbots, this is the other real critical capability required to provide any kind of value to the enterprise. The contact centre is the lifeblood of organisations – the place where your business and customers meet – and so having a tightly-coupled integration in-and-out of contact centre systems is the only way to make your conversational AI programme a mission critical capability, as opposed to an appendage.

This is table stakes for an AI platform today.

Orchestration of Multiple Employee-Facing Bots

“This is the orchestration of many employee-facing bots for a variety of tasks, and the ability for each bot to be managed by different parts of the business.”

Although orchestration of bots is a critical capability for those scaling their efforts, I wouldn’t have constrained this to employee-facing bots. Orchestration is just as important, if not more so, for customer-facing bots.

As businesses scale, and they move from a small team, to a centre of excellence, to a federated model, different teams across the organisation will manage different bots. Even different people from within the same team might manage different pieces of a single bot. Yet, all of this capability needs to be exposed on the front end to customers through a single access point, like a chatbot.

However, given that bot orchestration is listed as a feature i.e. a critical capability, I’m confused as to why it’s also listed as a use case. Which one is it?

Even the ability for bots to be managed by different members of a team or business unit, that’s part of what I would call the Enterprise Management capability, and isn’t a use case.

Gaps in Gartner’s analysis

Gartner’s critical capabilities and use cases for Conversational AI Platforms (CAIPs) cover a comprehensive set of features and scenarios, providing an extensive framework for assessing such platforms. However, like any evaluation criteria, it cannot encompass every possible feature or use case.

Some additional considerations for capabilities and use cases that might be worth exploring next year (and that buyers should consider today) are:

Critical Capabilities

- Large language models. This is a huge area that’s blown up since last November and likely couldn’t be incorporated into the analysis in time. Don’t be surprised to see this in the next critical capabilities assessment, which I imagine Gartner is beginning to put together as we speak.

Some of the most notable capabilities conversational AI platforms need when it comes to incorporating large language models can be found in this article. Briefly, the two main areas of focus are a) using LLMs to enhance or replace intent-based NLU models and b) to aid in the creation of design requirements such as flows and dialogue.

Although Gartner did publish Emerging Tech: Use Generative AI to Transform Conversational AI Solutions, this looks at generative AI on its own and doesn’t frame it within the perspectives of these critical capabilities, which are still required to bring about success.

- Multimodal Interactions: While voice and text channels are considered, the assessment only mentions capabilities that combine multiple modes of interaction (e.g., voice, text, and visual) once in the ‘use case’ definition. I’d like to see some deeper requirements here regarding the capability to utilise a combination of channels and modalities and how each vendor provides for this from a system design and technical perspective.

- Emotion Recognition and Response: Advanced conversational AI can interpret and respond to user emotions. This feature can enhance user experience but is not explicitly covered by Gartner’s criteria.

- Identification and verification: For all high-consequence and mission-critical use cases where a business needs to know for certain that it is talking to the right person, ID&V capabilities are critical. I’d like to see an acknowledgement of how vendors are integrating this capability into channels.

- Dynamic chat widgets: The ability to customise the embedded chat widget on a website or app to utilise graphical UI elements from within the chat. For example, date pickers, sliders or even form fields for capturing open data that doesn’t require classification (qualitative customer feedback or names, for example). We’re mostly stuck in the land of widgets in the bottom right corner of websites, but there’s so much more potential to create more immersive and dynamic conversational experiences.

- Accessibility and Inclusion: The ability of an AI platform to cater to diverse user needs, including features for users with disabilities, is not highlighted. This is an area lacking in general in the industry. So much so that NatWest had to build their own chat widget to make sure it was WCAG compliant. Having this as a critical capability will raise the importance among vendors of creating more inclusive tooling.

- Workflow and process orchestration: The ability to define and build specific workflows that thread together multiple stages of (or an entire) customer journey across time, touch points, conversations and modalities. You can probably do this with the leading platforms, but calling this out as a requirement will give a nod to the ability to provide more end-to-end journey automation rather than isolated conversations.

- Contextual Understanding: The ability to maintain the context of a conversation over an extended period, across different channels, and even when the user revisits after a gap.

- Proactive Engagement: The capability of the AI platform to initiate conversations or actions based on user behaviour, predictive analysis, or predefined scenarios.

Use cases

- Marketing and Sales: While contact centre capabilities and internal use cases are identified, there’s increasing interest in conversational AI on the marketing and sales side. This includes things like customer acquisition, lead generation, or product recommendations, which is a growing trend that’s not explicitly addressed.

- Product UI: Every piece of software used today has functions that can be addressed or called conversationally. From navigating websites to inputting data into CRM systems. All line-of-business and customer-facing systems can be conversational. Some of the conversational AI platforms, such as Cognigy, have already started implementing conversational UIs into their platforms themselves.

There’ll be a growing requirement for this capability to become customer-facing to enable platform users to create conversational UIs for their products, such as mobile apps, websites or even hardware.

- Search: Since Gartner’s analysis, many of the conversational AI platforms have mobilised large language models to help with both enterprise document search and customer-facing search from within the chat interface. This will be an important use case for future assessments, as well as a future potential to break out of the chat widget to provide this capability native on websites, mobile apps and the like.

- Domain specialisms: Enterprises will wind up having their own language model: a model that knows their industry, use cases and company-specific policies, processes and procedures intimately. Though the particulars of an individual business can’t be forecast, the use cases, conversational patterns and language model can be.

Many industries have the same or similar use case requirements. Checking your account balance in the banking industry for example. Booking a hotel room, changing your address, reporting an issue to your telco. The list goes on.

While domain-specific support is alluded to in the assessment, it warrants its own category, as providing businesses with the ability to get to value faster with domain-specific tuned models is critical for companies with fewer specialist AI resources or with an urgent need. So critical, that some conversational AI platforms specialise in only catering for specific industries, such as banking, credit and collections and healthcare.

- Horizontal use cases: This includes out of the box, pre-built models and conversations for common use cases that can be used across industries and across business units. Things like appointment bookings, rescheduling, sending reminders, taking payments, identification and verification and the like.

Summary

Cognigy came out on top in every Use Case category, despite coming out on top in only a few of the Critical Capability features. This speaks to the fact that features on their own don’t make your automation programme, or technology selection, a success. More important is how you orchestrate and synchronise those features together to provide a holistic solution that can deliver what the enterprise needs, as well as cater for the varying needs, skills and roles in the teams who work on these initiatives.

I’ve witnessed the dedication and progress of the platform and the team at Cognigy over the years, and can appreciate what this means. Big congratulations to the team on this achievement. The leaders at Cognigy have a track record of providing enterprise-grade software in the shape of CMS systems prior to starting Cognigy, and so it’s no surprise to me that the same level of attention to detail and rigour has made its way into the Cognigy platform.

As I said previously, it’s no small feat to come out on top in an appraisal like this, especially when you’re up against the might of the likes of Google, Amazon and IBM. But here we are, proof that great design and a laser focus on enterprise and user needs prevails.

As far as Gartner’s analysis goes, I’d say it’s thorough enough, and fair. It covers the fundamentals that you’d expect, as well as some future-facing requirements that’ll become more important over the next few years. I would recommend changes and iterations as stated above.

The biggest omission by far is support for large language models and generative AI. It was likely too late to add this into the assessment criteria, as the assessment was likely underway before November, when ChatGPT sent the world into a frenzy.

Make no mistake, it’ll be there next year. But who will come out on top?

Anything we missed here? Any capabilities you think should be added? Answers on a postcard… Or in the comments.