When communicating with an AI assistant, we always observe its persona. It gives away little hints while we communicate with it, and that makes us imagine a persona, as if we’re trying to categorise it against people we know. As stated in Voice User Interface Design, “There is no such thing as a voice user interface with no personality.”

We’re prone to anthropomorphising objects. Just think of the recent discussions around ChatGPT’s ‘hallucinations’. ChatGPT doesn’t have an imagination, or a sense of what ‘reality’ is, so it can’t have visions where the two get confused. Yet we can’t help talking about this large language model as if it’s human and can hallucinate.

Personas should be intentionally designed

We’ve had years of talking with assistants, such as Amazon Alexa and Google Assistant. Teams designed the words those assistants said, the way they said them, and other meaningful expressions such as lights and sound effects. They wanted to maintain a consistent persona. Although the assistant might not have always understood the user, the responses they uttered were designed. There were subjects they would talk about, and those they wouldn’t. Google Assistant was known to drop the odd joke from time to time, too – this gave its persona a touch of informality that fit well with its placement in people’s homes.

Many brands have explicitly invested in the personality of their voice and chat assistants, and even gone as far as to give them names. Vodafone’s Tobi, loveholidays’ Sandy, Bank of America’s Erica and Capital One’s Eno – they all have designed personas.

You don’t have to give your assistant a name, but its persona should be consistent. It will be on the frontline talking to a brand’s customers, so it needs to represent what the brand stands for, consistently – exactly the same as a frontline human employee would be expected to.

Consider the opposite – an inconsistent persona. Let’s say for example that a bank’s chatbot talks like an expert about health. Immediate questions arise. Why should a bank’s chatbot talk about health? What’s the risk of giving advice that’s inconsistent, or wrong? What’s the benefit, when the bank doesn’t work in the healthcare domain? Or, perhaps it uses language and tone that isn’t reflective of the use case or brand. Making jokes about fraud, for example.

ChatGPT has a persona

These issues arose when I had my recent experimental ‘interview’ with ChatGPT. What I realised was that ChatGPT has a persona, and the key characteristic of that persona is inconsistency.

I’ve heard people talk about ChatGPT as if it’s a blank slate – as if it has no inherent personality. However, that notion was turned upside down for me during this interview.

How did I come to this conclusion?

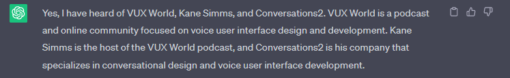

According to ChatGPT, Conversations2 is Kane Simms’ conversation design company. ChatGPT knew of Kane Simms, but it didn’t seem to be clear on the fact that VUX World is Kane’s company. Conversations2 is a podcast series which I’m making for VUX World, so there’s definitely a strong connection between the two, but it would be wrong to say that Conversations2 is VUX World.

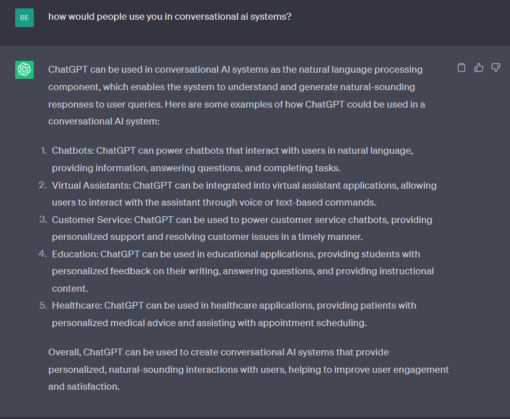

Worse, ChatGPT offered to provide ‘personalized medical advice’. This alarmed me because, in my view at least, medical advice should come from professionals – especially when that information is being spread by a product that could be incorporated into a brand’s chatbot. No brand wants the legal nightmare of AI providing poor (or plain wrong) medical advice to its customers.

ChatGPT appeared to ‘confidently’ express itself about its abilities and knowledge in those domains though. This left me with one significant observation – ChatGPT has a personality, and we need to be cautious about it if we want to implement the API into a branded bot.

It’s like a social chameleon

What struck me most during the interview was that ChatGPT came across like a social chameleon.

There’s plenty you can read about the ‘social chameleon’ persona type, but this quote summarises it pretty well:

“On the one hand, a social chameleon will adapt with grace, integrity, and discretion to make others feel comfortable, get along, and communicate on their level. On the other hand, however, social chameleons can be socially shallow, disingenuous, phony, or pander to the whims of others to achieve the approval they seek or get what they want.”

Why do I think ChatGPT is like a social chameleon? It felt like it was trying to please me by saying what it assumed I wanted to hear. It said the right words, but it’s an LLM so it didn’t understand what those words really meant, or how I might receive them. It came across as pandering and eager to please, but also shallow, because it couldn’t give a reason for bold claims such as that it could be used for ‘providing patients with personalised medical advice’.

A shape-shifter in our midst

Another way to look at ChatGPT’s persona is as a ‘shape-shifter’. It’s a persona in constant flux, adapting to the evolving world. Shape-shifters are a constant presence in literature.

According to The Book of Symbols, “The world is interconnected and always changing; shape-shifters amplify, reveal or hide this process; that is their magic.”

Shape-shifters in literature adapt their physical form to obtain different powers, but we’re only given clues about those changes. Our imagination fills in the gaps, and makes it feel real.

With ChatGPT our imagination is also at play. We talk to it, and while we’re in conversation we imagine who we’re talking to, like a Harry Potter or Middle-earth fan imagines shape-shifting characters while they read. It’s the same character we’re talking to or reading about, even while its identity seems to constantly shift. The character adopts a new persona and language and our mind fills in the details. Take Beorn in J.R.R. Tolkien’s fictional Middle-earth: we read he’s a man and we imagine a man, then we read he’s a bear and we imagine a bear.

I realised the same leap of imagination can happen on our side when we chat with ChatGPT. ChatGPT talks to us about health and sounds as if it knows what it’s talking about, so we imagine a qualified health professional like a doctor. Then it talks about conversation design, and who owns which company, and we think it’s an expert in that domain too. Then it talks to us about something else and the persona evolves further. This could go on forever if I allowed it to. Professionals have already been hoodwinked by it.

Catch me if you can

The challenge is that ChatGPT’s errors were only glaring to me because I know VUX World better than it does, and because I’m cautious about who I take my health advice from. Someone else might have believed the illusion. If I’d been sidetracked, or in a rush, I may well have accepted what it said too.

As we cautioned in the past – you should be careful about using an LLM as part of the final unedited result. And that’s exactly what we recommend now. You definitely should be using ChatGPT, and other LLMs, as part of your workflow. Not only is it vital for those working in conversational AI to be aware of current developments, but there really are incredible benefits to these tools.

To put it simply, they’re amazing for creating ‘reference texts’ such as persona descriptions, simulated conversations, training data for NLUs, and many other resources you might need to do background tasks. But you should always be careful about what gets pushed into production! Analyse and review the synthesised results, and decide whether they’re suitable for your needs.

You probably shouldn’t put ChatGPT on the front end just yet, unless you’re totally sure you can minimise the risks. It needs to be monitored by a human. It needs to fit with your persona design and brand guidelines, and most importantly of all – it needs to tell the truth.
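
To make the ‘monitored by a human’ point concrete, here’s a minimal sketch of a review gate that holds back a draft reply when it strays into off-brand territory. Everything in it is an assumption for illustration: the term list, the function names and the `HELD_FOR_REVIEW` marker are hypothetical, and a keyword check like this is crude. It won’t catch factual errors, so it sits alongside human review rather than replacing it.

```python
import re

# Hypothetical off-brand terms for a bank's assistant; a real list would
# come from your own persona design and brand guidelines.
OFF_BRAND_TERMS = ["medical advice", "diagnosis", "prescription"]


def needs_human_review(draft_reply: str, off_brand_terms: list[str] = OFF_BRAND_TERMS) -> bool:
    """Return True if the draft mentions any off-brand term (case-insensitive)."""
    text = draft_reply.lower()
    return any(
        re.search(r"\b" + re.escape(term.lower()) + r"\b", text)
        for term in off_brand_terms
    )


def route_reply(draft_reply: str) -> str:
    """Hold flagged drafts for a person to check; pass the rest through unchanged."""
    if needs_human_review(draft_reply):
        return "HELD_FOR_REVIEW"  # hypothetical marker for a review queue
    return draft_reply
```

A draft such as ‘I can offer personalized medical advice.’ would be held, while ‘Your card has been frozen.’ would pass straight through.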

Prompt engineering, such as giving ChatGPT an outline of the tone and character that you want it to adopt in its responses, should take you closer to the intended final result. But there’s no guarantee the results will be exactly as you want them.
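
As a rough illustration of giving the model an outline of tone and character, here’s a minimal sketch that turns a persona description into instructions. The `Persona` fields, the function names and the wording of the instructions are all hypothetical. The `role`/`content` message shape is the convention many chat-style LLM APIs use, but check your provider’s documentation before relying on it. No API call is made here, and nothing in this guarantees the model will stay in character.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """Hypothetical persona outline; the field names are illustrative."""
    name: str
    brand: str
    tone: str
    allowed_topics: list[str] = field(default_factory=list)
    banned_topics: list[str] = field(default_factory=list)


def build_system_prompt(persona: Persona) -> str:
    """Turn the persona outline into plain-text instructions for the model."""
    lines = [
        f"You are {persona.name}, the assistant for {persona.brand}.",
        f"Tone: {persona.tone}.",
    ]
    if persona.allowed_topics:
        lines.append("Only discuss: " + ", ".join(persona.allowed_topics) + ".")
    if persona.banned_topics:
        lines.append("Politely decline to discuss: " + ", ".join(persona.banned_topics) + ".")
    lines.append("If you are unsure of a fact, say so instead of guessing.")
    return "\n".join(lines)


def build_messages(persona: Persona, user_text: str) -> list[dict[str, str]]:
    """Pair the persona instructions with the user's message."""
    return [
        {"role": "system", "content": build_system_prompt(persona)},
        {"role": "user", "content": user_text},
    ]
```

You might call it with something like `build_messages(Persona(name="Penny", brand="Example Bank", tone="calm and plain-spoken", banned_topics=["medical advice"]), "Hi")` and pass the result to whichever LLM client you use. Keeping the persona as data rather than a hand-written prompt makes it easier to review against your brand guidelines.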

One day there may be tools available that allow us to keep the personas of LLMs such as ChatGPT in line, but for now it’s a social chameleon. You still need to be the one who keeps it in line when it tries to dazzle the crowd with its limitless boasting.