People from all industries and professions have gotten a hold of ChatGPT and have begun to understand the potential power of NLP technologies. In the field of conversational AI, for obvious reasons, there’s been a LOT of talk about whether a future version of ChatGPT would negate the need for conversation designers.

The reactions from the conversational AI community could broadly be placed into two camps:

- I’m wetting myself with fear

- I’m wetting myself with excitement

There’s no need for either yet. You can keep your underwear on. And it’s right that we should be divided right now, because we really don’t know where this thing will go, how (or if) it’ll be used in production enterprise use cases, and how it will realistically affect what we do.

But we can speculate, based on what’s in front of us, about the ways in which we could use ChatGPT to improve the practice of conversation design and development.

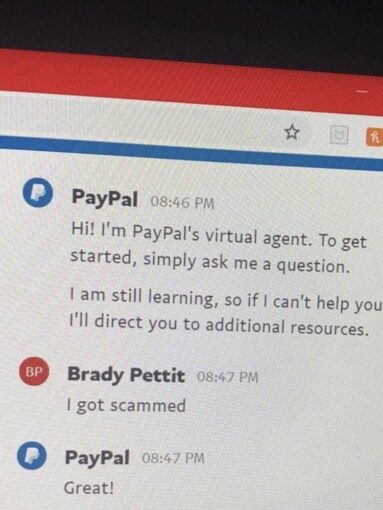

So how might you use it? How can we avoid mindless bots who say the absolutely wrong thing at the wrong time, purely because they weren’t designed properly?

Perhaps we’re about to have another “I got scammed – great!” moment when someone applies this new technology without really considering how it will work in the wild lands of the real world.

We need to keep our heads (and underwear) on, and think realistically about how we can use this.

Here are 15 ways ChatGPT (and LLMs) could help you build better bots

Something to note before we get into this list. This list was written 3 days after ChatGPT launched. It’s taken us a little while to get this post out. At the time of writing, the below were simply ideas. Since then, the great team at Voiceflow has actually implemented a number of these things already in its recently announced AI Assist! Kudos to them.

- Happy path creation. Ask it to simulate a conversation and have it generate some sample conversation paths. Check out Maaike Groenewege’s post on Medium for some examples.

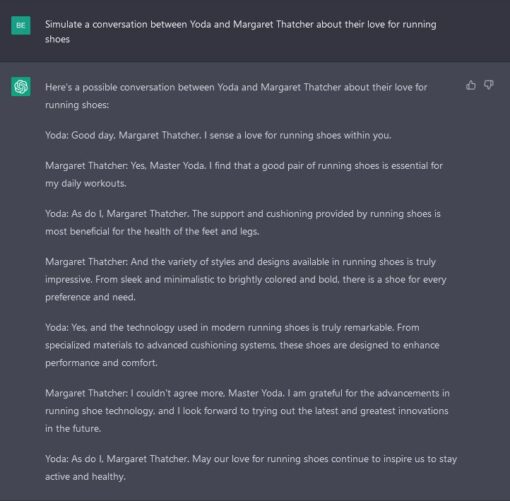

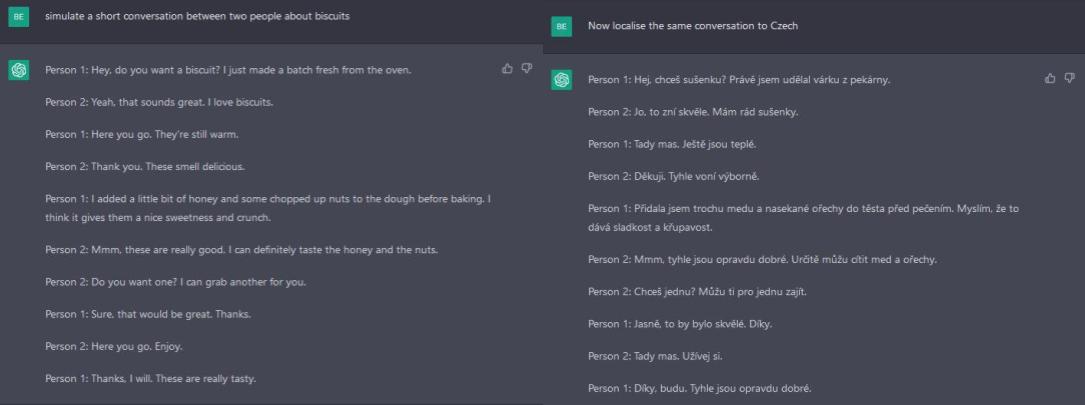

- Simulate a conversation between various parties. How might famous personas speak about specific subjects? This could be used as a reference in persona design.

It could be better, couldn’t it? Yoda’s first line is great, and Thatcher’s second line sounds good when said in a profound speech-making voice. Interesting to note that ChatGPT didn’t attempt Yoda’s odd sentence structure, such as ‘Your path you must decide’.

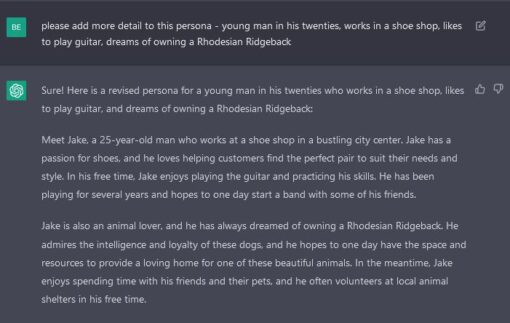

- Enhance a fictional persona. With a short prompt, ChatGPT can add a lot of wonderfully on-topic detail.

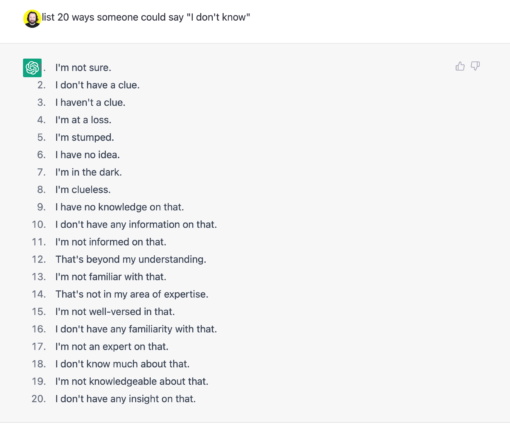

- Intent training. Ask it to list the different ways a user can say ‘I don’t know’ and you’ll get plenty of training data. Voiceflow has actually recently implemented this.

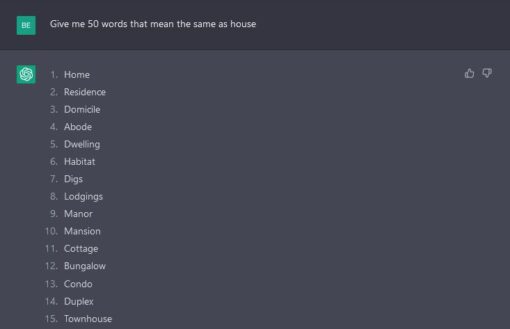

Beware of the risks, though. Someone might say “I’ve lost my bank card”, which may trigger a ‘freeze card’ intent followed by an ‘order replacement card’ intent. Simply asking ChatGPT to generate ways to say “Can you freeze my bank card” will miss those alternative ways of triggering the intent. Therefore, we’ll still need real customer data to balance this.

- Entity generation. ‘Give me 50 words that mean the same as house’ or ‘list 50 different pizza toppings’. Another one that Voiceflow has recently implemented.
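Going back to the intent training caveat above, here’s a rough Python sketch of how you might blend ChatGPT-generated utterances with real customer ones, so the generated set tops up the real data rather than replacing it. The function names and the sample utterances are made up for illustration, and the generated list is assumed to have been pasted in from a ChatGPT session rather than fetched through any API.

```python
def normalise(utterance: str) -> str:
    """Lowercase and collapse whitespace so near-identical utterances match."""
    return " ".join(utterance.lower().split())


def merge_training_data(generated, real):
    """Combine real and generated utterances without duplicates.

    Real customer utterances go first, so when both sources contain the
    same phrase, the real one is the copy that is kept.
    """
    seen = set()
    merged = []
    for source, utterances in (("real", real), ("generated", generated)):
        for utterance in utterances:
            key = normalise(utterance)
            if key and key not in seen:
                seen.add(key)
                merged.append((utterance.strip(), source))
    return merged


# Hypothetical example data for a 'freeze card' intent.
generated = ["Can you freeze my bank card", "Please freeze my card", "Block my card"]
real = ["I've lost my bank card", "please freeze my card ", "my card was stolen"]

training_set = merge_training_data(generated, real)
for utterance, source in training_set:
    print(f"{source:>9}: {utterance}")
```

Tagging each utterance with its source makes it easy to see how much of an intent’s training data is synthetic, which is worth keeping an eye on.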

That’s the first five.

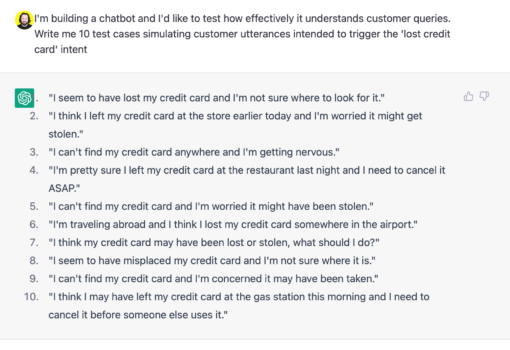

- Training and quality assurance. How about having it generate test cases? Ask it for test questions that aren’t in your training data to test your language model.

- Prompt writing. How best to ask x question? Check out this example of someone who used it to generate prompts to feed into MidJourney and create AI art.

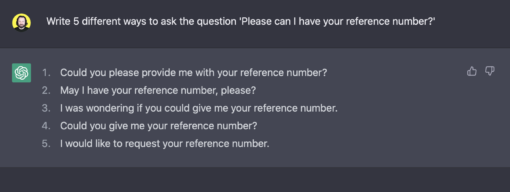

- Prompt variations. Random prompts that mean the same thing but keep the conversation dynamic, or are specialised for specific customers. Another that Voiceflow has recently implemented.

- Localisation. Create multi-language conversational designs before sending them to the loc department. ChatGPT covers more languages than just English. Its first attempt should be conversational in any language (at least, it should be better than simply Google translating the source version), so your loc team’s starting point should be a higher quality localisation, which will save them time.

- Beating writer’s block. ChatGPT is an idea generator. If you simply want to try out an idea rapidly (‘what if we rap this sales copy?’), then ChatGPT will let you try that idea very quickly so you can decide if it’s a winner or a binner.
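For the prompt variations idea in the list above, here’s a minimal Python sketch of how a bot might rotate through a set of equivalent prompts without saying the same one twice in a row. The `PromptVariations` class and the example prompts are hypothetical, and the variants are assumed to have been generated (and reviewed by a human) beforehand.

```python
import random


class PromptVariations:
    """Pick a random variant each turn, avoiding an immediate repeat."""

    def __init__(self, variants, seed=None):
        if not variants:
            raise ValueError("at least one variant is required")
        self._variants = list(variants)
        self._rng = random.Random(seed)  # a seed makes the choices reproducible
        self._last = None

    def next(self) -> str:
        # Exclude the previous prompt unless it is the only one available.
        choices = [v for v in self._variants if v != self._last] or self._variants
        self._last = self._rng.choice(choices)
        return self._last


# Hypothetical variants of the same 'anything else?' prompt.
anything_else = PromptVariations(
    [
        "Is there anything else I can help with?",
        "Anything else you need today?",
        "What else can I do for you?",
    ],
    seed=7,
)

picked = [anything_else.next() for _ in range(20)]
print(picked[:3])
```

The same shape would work for customer-specific variants: keep one pool per customer segment and pick the pool before picking the prompt.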

In the future, there’s potential for this thing to be able to do:

- Q and A. Ingest your company’s knowledge base and generate answers to questions. You could do this today with GPT-3, but the conversational improvements to ChatGPT make for more engaging natural responses.

- Fallback resolution. Imagine feeding your no-match utterances to ChatGPT (or any other LLM) and have it predict which one of your intents it belongs to. It would take the utterance, expand upon it to consider various things the user could have been saying, and then ask the user to choose from the top 3 options with most system confidence. You’d be able to cut down your optimisation overhead tremendously.

- Small talk. Hand off small talk questions to it. Now you don’t have to design bot responses to ‘what’s your favourite colour?’. Again, Voiceflow has recently implemented this, though not with ChatGPT. These work well enough at the moment, but lack context of the conversation and so once it’s responded to a query, the resulting prompts don’t get the conversation back on track as well as they will in future.

- Debug code. Imagine feeding it a buggy piece of code and asking it to find out what’s wrong with it, like this. This has been shown to work in some cases, but not proven to be 100% reliable as yet.

- Test code. Similarly, imagine feeding it sample code and asking it to perform unit or regression tests on it.
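The fallback resolution idea in the list above could look something like the Python sketch below: score a no-match utterance against each intent, keep the three most confident candidates, and ask the user to choose. Everything here is an assumption for illustration. In particular, `score_intent` is a crude word-overlap stand-in for the confidence an LLM might return, not a call to ChatGPT or any real service, and the intents are invented.

```python
def tokenise(text: str) -> set:
    """Split an utterance into a set of lowercase words."""
    return set(text.lower().split())


def score_intent(utterance: str, examples) -> float:
    """Placeholder scorer: best Jaccard word overlap with any example.

    In the scenario described above, an LLM would supply this confidence.
    """
    words = tokenise(utterance)
    best = 0.0
    for example in examples:
        example_words = tokenise(example)
        union = words | example_words
        if union:
            best = max(best, len(words & example_words) / len(union))
    return best


def top_intents(utterance: str, intents: dict, k: int = 3):
    """Return up to k (intent, score) pairs, most confident first."""
    scored = [(name, score_intent(utterance, ex)) for name, ex in intents.items()]
    scored = [(name, score) for name, score in scored if score > 0]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def disambiguation_prompt(options) -> str:
    """Build the question that asks the user to pick an intent."""
    if not options:
        return "Sorry, I didn't catch that. Could you rephrase?"
    lines = ["Did you mean one of these?"]
    lines += [f"{i}. {name}" for i, (name, _) in enumerate(options, start=1)]
    return "\n".join(lines)


# Hypothetical intents with a couple of example utterances each.
intents = {
    "freeze_card": ["freeze my card", "block my bank card"],
    "order_replacement_card": ["order a new card", "replace my bank card"],
    "check_balance": ["what is my balance", "how much money do I have"],
    "opening_hours": ["when are you open", "what are your opening hours"],
}

options = top_intents("my bank card is gone", intents)
print(disambiguation_prompt(options))
```

Whatever the user picks can then be logged against the original utterance, which is where the saving on optimisation overhead would come from.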

Of course, the practical limitation of this today is that LLMs, including ChatGPT, still have the potential to turn racist or say something that’s abusive or generally not in line with how you’d like to speak with your customers, in spite of more recent restrictions put in place.

But let’s remember – ChatGPT is not available for production use (yet). In order for LLMs to achieve mass adoption they need to become embedded into existing tools before they will be used for any of the above. There’s too much friction today. They need to be part of your workflow and easily accessible and usable, which is why it’s encouraging to see the progress Voiceflow has made in recent weeks.

Where could this lead?

As this ‘mind blowing panel of talented professionals’ says, the future of conversational AI is going to be one where we give specialised services to each and every customer.

ChatGPT could allow for that to happen, and when it is production-ready, it should allow teams building conversational AI to spend more time researching user needs and improving the conversational experience to give users what they need.

Each and every one of those customers is unique, and each of them has specific needs when they contact you. ChatGPT may well allow us to dynamically generate the best way to communicate with them depending on their circumstances (while keeping in mind the bot’s designed persona and the brand too).

You could say the tide of our entire industry will rise, and every practitioner will be able to make better experiences for every customer. ChatGPT and other LLMs won’t solve all issues, and they won’t replace conversation designers, but they should allow everyone to do better work.

All of this nudges our industry forwards. Conversation designers may become more curator than creator, leading a small army of bots to ensure that customers get the best service possible, because ChatGPT shows that computers can convincingly create human language.