When Open AI released their ‘Six strategies for getting better results’ in Dec 2023, anyone who has worked in conversational AI must have thought oh, that looks familiar!

The blog showed various techniques for working with ChatGPT. So many of the techniques are similar to the things conversation designers and programmers from the CAI industry do every day.

We’ve been aware of this crossover for a wee while already. During Unparsed 2023 there were many discussions around how conversation designers are best suited to help organisations exploit the potential of LLMs (Large Language Models). When Greg Bennett appeared on Conversations2, he spoke about how his team of conversation designers at Salesforce were designing prompts for use cases such as unique sales emails. While the similarities may be obvious to those already in the industry, it’s not obvious to the hundreds of millions of people who just started using LLMs.

There are a few differences too. I want to highlight the similarities and differences in this article. It should help anyone with experience in CAI to see what they should learn, as well as those who are just starting to work with LLMs to see how experienced Conversational AI practitioners would make great hires for their team.

Let’s look at what’s in Open AI’s recommendations, and how they relate to Conversational AI techniques.

Write clear instructions

Essentially, Open AI are saying without context or a proper brief, how can you get to the result you want?

The various ways they recommend to do that are:

Include details in your query to get more relevant answers – When designing conversations, we need to know certain things in order for the conversation to progress. It’s all about details. Whether a customer is ordering something, asking for advice, or asking an FAQ, Their needs can’t be resolved without knowing exactly what they want.

Conversation designers are used to writing so that specific details are shared, such as using the ‘escalating detail’ technique. In order for a conversation to progress towards the desired outcome, specific details must be discussed and agreed upon, otherwise misunderstandings can arise. That’s as relevant to ChatGPT as it is to designing a bot. For example, a bot saying ‘you’ve had suspicious activity on your account’ could lead to the user asking ‘is that my current account or savings’, whereas the bot saying ‘you’ve had suspicious activity on your current account’ shouldn’t have that issue. With specific detail, it’s easier to reach mutual understanding.

Ask the model to adopt a persona – conversation designers create personas for every bot. They may go about it in different ways, such as designing a super employee, a nuanced character that extends beyond words alone, or they let the persona be formed by their interpretation of user needs. These are just different techniques for arriving at the same result – a unique character that’s purpose-designed for the task at hand. No matter how far you go in designing a persona, you could say the main aim is to achieve consistency in the outputs, whether they appear in a bot or uniquely generated responses from an LLM.

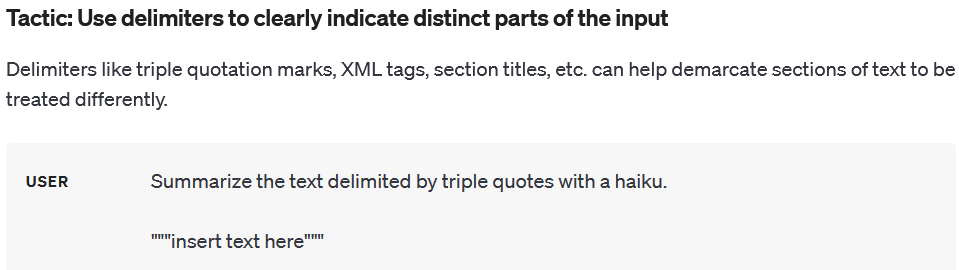

Use delimiters to clearly indicate distinct parts of the input – when working with ChatGPT, you’d do this to define which parts of the text should be treated differently (see image below). When designing a bot, we frequently have references to code within a bot’s responses. The user won’t see the code. It’s used to actively update the bot’s language with specific terms, such as a user’s name.

These are similar techniques because both rely on having a concept of what the final result should look like in your mind’s eye, and then formatting text in the right way to achieve that. We think in a structured manner. We do the same when working with entities – we think about what the system needs to function properly, and plan for that in advance.

Specify the steps required to complete a task – when designing a bot, you first define the use case, and then you research how people would carry out that use case (as well as what is practically possible with the technology being used). The output of that is a series of steps required to complete the task! That’s where every conversation design starts.

Provide examples – you need to understand the limitations of the technology. Just because you know what you want, doesn’t mean the technology perfectly understands what you’re asking for. Conversation design is all about dealing with technological shortcomings. Don’t get us wrong – what’s possible is incredible, and gets better every day.

The thing is, that in order to get a machine to have what appears to be a natural conversation, there’s a lot of unnatural processes happening in the background. Conversation designers often have to find ways to force a machine to understand what a human means, and that’s exactly why you’d provide examples to an LLM – to say ‘here’s the ballpark of what we’re aiming for’.

Specify the desired length of the output – this is straightforward. You know the result you want and where it will be used, and you know the limitations for the final format. That’s just like writing dialogue for a bot. Conversation designers are used to thinking about the normal rate of speech when designing for voice (around 150 words per minute) and not overloading users with unnecessary information when designing for chat. We also often have to write utterances to recommend to users to be more brief, as NLUs aren’t great at deciphering a user’s need when they oh well, eh… don’t clearly make their point. LLMs can understand users even when they’re being verbose, but it’s still important that we limit the length of the things they say to fit the intended use case.

Provide reference text

Open AI are saying; sh*t in = sh*t out.

You need to curate the materials that go into the LLM to refine the results. Just because an LLM can refer to the entire internet doesn’t mean it’s smart enough to know what’s true and relevant. They’re just prediction machines that act on the resources they’re given, with zero awareness of the real-world implications of what they say.

There have been recent high-profile examples of LLM-based chatbots going rogue when their inputs weren’t properly curated; Chevy’s bot was tricked into selling trucks for $1, or recommending people buy a Ford instead, and DPD parcel delivery service had issues with their bot dissing their brand too.

Open AI recommend you go about this in two possible ways:

- Tell the LLM to only generate an answer from the resources you give it (which you know to be correct)

- Ask it to provide an answer and support it with direct quotes from the resources you give it

Their recommendation also includes something that’s worth highlighting – ask the LLM to say when it can’t find an answer! As everyone who’s communicated with an LLM knows, it will try to hoodwink you into thinking it has the answer to everything.

This is a new challenge for people with experience in Conversational AI. Previously, we had to define what goes into the experience, but now we have to define up-front what to leave out.

Conversation designers always aimed to design conversations that were true, relevant, and up to date. Many took Grice’s maxims as a useful reference when writing, because they provided a simple structure for conceptualising conversations (that’s not to say they’re perfect).

However you go about it, you need to focus on curating your resources with a ‘single source of truth’ approach. You want the things your bot tells people to be useful and helpful. Always seek out reference texts that are most relevant to your needs.

Split complex tasks into simpler subtasks

Always refine information down to a simpler form, for reuse in the wider context

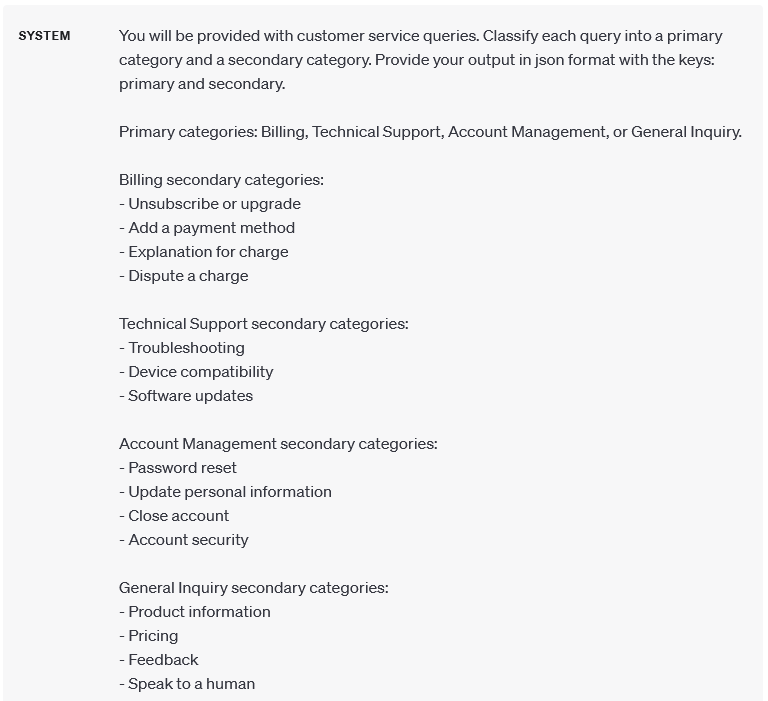

Use intent classification to identify the most relevant instructions for a user query – Open AI’s description of this is rather verbose, but it’s something that’s totally common in conversational AI. Perhaps they should have asked a conversation designer to write the description! As standard, we use intents to define all the things people might want to do. We use those intents to design the conversations. This is how we guide people towards outcomes – by understanding what they’re asking for (read up on NLUs if you want to see how that works). Open AI suggest you apply this technique to ChatGPT, by defining what someone might be trying to do, before deciding what the next steps should be. Conversation designers will no doubt see the similarity between the example given by Open AI and a list of intents that would be defined for a bot – they look the same!

For dialogue applications that require very long conversations, summarise or filter previous dialogue – this is about working within the limitations of the technology. This also highlights a key difference between your standard bot and an LLM; not many ‘pre-designed’ bots can handle long and meandering conversations (other than the likes of Kuki).

LLM based conversations, on the other hand, could theoretically go on forever, and discuss everything that’s possible to discuss. While you want it to feel like a seamless conversation for the user, in the background you have to have strategies for dealing with the system’s limits. Open AI recommend you use the LLM to summarise what has already occurred in the conversation to do that.

Before trying this technique I’d want to ensure no customer PII is being stored on Open AI servers, and that no detail is being lost in the summaries.

Summarize long documents piecewise and construct a full summary recursively – this is another technique that’s probably not common for people coming from Conversational AI. Most of us aren’t used to summarising entire books for use within a chat! This is probably more related to best practices when using stickies for ideation (which is common for conversation designers) – we write all our ideas on stickies in a burst of creativity, then we try to make each sticky a concise summary of the idea, and then we group stickies into themes and summarise those. According to Open AI, this is a good strategy for working with LLMs too.

Give models time to “think”

Critique the LLM like it’s another designer showing their thought process to you

Design choices shouldn’t be based on assumptions or poor data – you want to know you’re solving a real user need. Open AI recommends you mark the LLM’s homework to make sure it has good rationale for the choices it made. Ask ‘why did this input lead to that output’?

Knowing how to design conversational AI should make you more adept at checking the LLM’s process for errors and finding fixes.

Instruct the model to work out its own solution before rushing to a conclusion

Conversation designers will work with the client to understand their methods for solving problems (such as helping a customer troubleshoot issues with a product), because the final design will have to help people solve those problems too! This ‘best practice’ guides the design process.

Although it may be preferable to alter the method, if perhaps a better approach arises during development, the design team will always have an understanding of the client’s methods so that the development process is focused. Open AI are recommending a process that is very similar to this stage of the conversation design process.

Use inner monologue or a sequence of queries to hide the model’s reasoning process

This technique arrives at the same result that all conversational AI should strive for – to make the interactions feel as natural and seamless to the user as possible.

Showing the technology’s inner workings in most situations will only lead to confusion, as most people don’t understand code or know internal business processes, and only want to get something done so they can move on with their day.

Ask the model if it missed anything on previous passes

Again this leads to a result that great conversational experiences strive for – to give people the information they need for whatever it is they’re trying to do. If you omit certain details there will be issues, whether you’re sharing a recipe, steps for changing a tyre, or anything else.

The process of getting there is unusual though because usually conversation designers would be the first to blame if vital details were left out of a conversation, and now they’re checking up on the LLM instead 🙃

Use external tools

Open AI’s description here is really good – If a task can be done more reliably or efficiently by a tool rather than by a language model, offload it to get the best of both.

It’s totally common for conversational experiences to rely on APIs for information that informs the direction of the conversation, or to pass specific details to the user, or is stored on a record for later. It’s the most efficient way – we design the robust system that is fed with and feeds current data from other sources.

Use embeddings-based search to implement efficient knowledge retrieval

Again, this is about curating your materials (remember sh*t in = sh*t out). Open AI recommends you give the LLM resources that are trusted to be true, and those are used to train the model. Every time the LLM generates something, it only refers to those specific resources, so it should be more accurate.

Use code execution to perform more accurate calculations or call external APIs

Just because an LLM can create the illusion of being good at math, doesn’t mean that it actually is good at it! You can ask the LLM to run code to check a calculation rather than work it out by itself, which should be a more robust process.

Give the model access to specific functions

Essentially, this allows the LLM to carry out an action with an external app. That means it could send an email or buy something for the user. For conversational AI applications to be useful, and progress up the use case maturity scale to add more value, they will have to start letting users take actions sooner or later. Instead of simply ‘giving your model access to functions’, you should first define the process you intend to automate, and make sure you have the APIs and security in place to integrate properly.

As everyone who’s worked with bots knows, these are also times for explicit confirmations! Don’t let the bot assume it knows what the user wants – you want to explicitly confirm what you’re about to do with the user before enacting it. That’s a learning from the trenches of Conversational AI, thank you very much!

Test changes systematically

Make small changes and test them regularly – did they improve or worsen the result?

This is a conversation design best practice. You make small changes, and then test them to see if they improved the result. You might make a change with the best intentions and then find it makes things worse. When working with NLUs we need to do this regularly. We’re making living documents – a conversational experience is never ‘done’ and needs constant tweaking and updating.

Evaluate model outputs with reference to gold-standard answers

This is about having a ‘single source of truth’ approach, and knowing which details must be included. As already stated in this article – those are Conversational AI best practices.

In this case, Open AI are suggesting you ask the LLM to check its own homework. In theory that’s a more robust approach to getting a solid result. In reality, it needs a lot of testing to see how reliable it is. We know that models ‘hallucinate’ – how can we be sure they’re capable of reliably checking their own result for factual accuracy?

Conclusion

LLMs aren’t brand new. While ChatGPT is undoubtedly the most famous of them all, they’ve been around for years.

When you think about it, it’s not a huge surprise that there are such similarities between the techniques used in Conversational AI and the best practices when working with LLMs. They are conversational AI capabilities!

We’re talking about working with language within a computer system. While LLMs may look smart and exciting, remember that all they do is calculate the most likely word that should come next, and draw their results from the training data they’ve been given, as well as the user’s input. We’re talking about complex systems that create the illusion of being able to communicate naturally with humans – exactly like a chatbot or voicebot.

While some methods may be similar and some may be different, the concepts of how you structure materials and think about language are incredibly similar. People who have Conversational AI experience might not know every technique to use with an LLM, but they’ve got a huge head start in terms of thought processes!

Regardless of whether the experience is based on an LLM or an NLU, the aim is to create a great user experience that’s based around language. The conversation design skill sets, standards, theories, methodologies, processes and practices all remain the same. It’s just the technology that changes, but it’s not even a fundamental shift! We’re still working with a computer system that understands and responds to human language. At the end of the day, having a grounding in conversation design techniques is going to improve any bot, no matter what technology it’s based on. Our approach is as suitable to LLMs as it is to NLUs; even though they’re technically different, that doesn’t change the goals of conversation design, or the value of designers.